Use a webcam to turn motion into music

A tutorial by Peter Kirn from MAKE: vol.4.

MAKE: is a terrific (print) magazine, but it is also a blog full of resources. See in particular Instructables.

Here is an article from issue no. 4. I don’t have time to translate it for now, but there’s nothing in it that is hard to understand.

I thought this article offered a fun project to build and experiment with. It relies on tools that are worth getting to know. Let’s go!

Leon Theremin introduced the world to music made from the ether in 1919 with the Thereminvox: wave your hand through the air, and the tube-based instrument produces sound. Now you can explore new ways of controlling sound in the air with nothing more than a cheap webcam and off-the-shelf DIY software.

You’ll need several elements to pull this trick off:

1. A webcam.

2. Motion analysis or “computer vision” software.

3. A means of translating the motion analysis into control (usually MIDI).

4. Something to control, like software synthesizer settings or effects parameters.

The easiest part of the equation is the webcam. I’ve used both an Apple iSight and a Logitech QuickCam Pro for Notebooks; I prefer the Logitech camera because it’s cheaper and works well on both Windows and Mac.

There are many forms of video motion analysis, including motion detection and motion tracking. Motion detection responds to any change in the color of specified pixels anywhere in a video source. It can tell whether something is moving, but it can’t follow a pixel or object through space. Because you can divide the image into a grid, motion detection works well for camera-controlled drum machines: each grid section triggers a different percussion sound.
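The grid approach can be sketched in a few lines of Python. This is a minimal illustration, not the software the article uses: it assumes frames arrive as 2D lists of grayscale values (0–255), compares each grid cell between consecutive frames by mean absolute difference, and maps a hypothetical 2×2 grid to General MIDI percussion note numbers (36 bass drum, 38 snare, 42 closed hi-hat, 46 open hi-hat). The threshold of 20 is an arbitrary starting value.

```python
from typing import Dict, List, Tuple

Frame = List[List[int]]  # grayscale pixels (0-255), indexed as frame[y][x]

# Hypothetical mapping from 2x2 grid cells to General MIDI percussion notes.
DRUM_NOTES: Dict[Tuple[int, int], int] = {
    (0, 0): 36,  # bass drum
    (0, 1): 38,  # snare
    (1, 0): 42,  # closed hi-hat
    (1, 1): 46,  # open hi-hat
}


def grid_motion(prev: Frame, curr: Frame, rows: int, cols: int,
                threshold: float) -> List[Tuple[int, int]]:
    """Return the (row, col) cells whose mean absolute pixel change exceeds threshold."""
    cell_h = len(curr) // rows
    cell_w = len(curr[0]) // cols
    active = []
    for r in range(rows):
        for c in range(cols):
            total = 0
            for y in range(r * cell_h, (r + 1) * cell_h):
                for x in range(c * cell_w, (c + 1) * cell_w):
                    total += abs(curr[y][x] - prev[y][x])
            if total / (cell_h * cell_w) > threshold:
                active.append((r, c))
    return active


def drum_hits(prev: Frame, curr: Frame, threshold: float = 20.0) -> List[int]:
    """Translate the active cells of a 2x2 grid into percussion note numbers."""
    return [DRUM_NOTES[cell] for cell in grid_motion(prev, curr, 2, 2, threshold)]


# Usage: a bright patch appears in the top-right quadrant of a 4x4 frame.
prev = [[0] * 4 for _ in range(4)]
curr = [row[:] for row in prev]
for y in (0, 1):
    for x in (2, 3):
        curr[y][x] = 200
print(drum_hits(prev, curr))  # → [38]
```

Each cell is judged on its own, which is exactly the limitation described above: the code knows *where* something changed, but not which way it went.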

Motion tracking is more sophisticated: it follows a pixel (or object) through space. For continuous control of parameters, as with a video theremin, an effect controller, or in-air scratching, you’ll need some form of motion tracking. Implementing motion tracking from scratch is thorny stuff, but there are many off-the-shelf solutions, a number of them free. Interactive toolkits let you create your own custom software by “patching” visually instead of coding, which is ideal for handling the motion data flexibly. Because they can transmit MIDI, they can also control instruments and effects software.
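To make the difference from detection concrete, here is a deliberately crude tracker in Python. It is not how EyesWeb or cv.jit work (they offer more robust techniques); it simply assumes grayscale frames as 2D lists, finds the pixels that changed by more than a hypothetical threshold between consecutive frames, and reports their centroid. Following that centroid from frame to frame yields a position, and therefore a direction, that can drive a continuous parameter.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # grayscale pixels (0-255), indexed as frame[y][x]
Point = Tuple[float, float]


def motion_centroid(prev: Frame, curr: Frame, threshold: int = 30) -> Optional[Point]:
    """Return the (x, y) centroid of pixels that changed by more than threshold, or None."""
    sum_x = sum_y = count = 0
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (a, b) in enumerate(zip(row_prev, row_curr)):
            if abs(b - a) > threshold:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


def track(frames: List[Frame], threshold: int = 30) -> List[Optional[Point]]:
    """Centroid of motion for each pair of consecutive frames."""
    return [motion_centroid(a, b, threshold) for a, b in zip(frames, frames[1:])]


# Usage: a bright pixel moves from x=1 to x=3 in a one-row image.
f0 = [[0, 0, 0, 0, 0]]
f1 = [[0, 255, 0, 0, 0]]
f2 = [[0, 0, 0, 255, 0]]
print(track([f0, f1, f2]))  # → [(1.0, 0.0), (2.0, 0.0)]
```

The rising x coordinate shows the object moving right. Because frame differencing flags both where the object was and where it is now, the second centroid lands between them (x = 2.0), one reason dedicated tracking libraries do better.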

On Windows, the free software EyesWeb is perfect; it’s designed specifically for experimenting with computer vision and has plenty of tweakable parameters.

Mac and Linux users can combine the interactive toolkit Pure Data with PiDiP (a recursive acronym for “PiDiP is Definitely in Pieces”). Both PD and PiDiP are open source, though they’re a little less robust when it comes to motion tracking. The best cross-platform solution is Max/MSP and Jitter from Cycling ’74 (Mac/Windows); it has no built-in computer vision, but it offers extensive modular MIDI, audio, video, and number-crunching capabilities. Two cross-platform add-on libraries give Jitter a full computer vision toolkit: the commercial Tap.Tools ($65–$119) and cv.jit (free). I regularly use both.

Max/MSP/Jitter is an expensive solution, costing hundreds of dollars, but to get you started, I’ve created a simple standalone application developed in Jitter that does the work for you, converting motion tracking to MIDI data. The download, instructions, and details on how it was made are available on the MAKE site.
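The author’s application is not reproduced here, but the core step of turning a tracked coordinate into MIDI data can be sketched. This Python sketch assumes positions in pixels along one axis of an image `size` pixels wide, scales them to the 7-bit range 0–127 used by MIDI controller values, applies simple exponential smoothing (the `alpha` factor is an assumption, not from the article) to calm camera jitter, and emits a value only when it changes, so the MIDI stream isn’t flooded with repeats.

```python
from typing import Iterable, List, Optional


def positions_to_cc(positions: Iterable[float], size: int,
                    alpha: float = 0.5) -> List[int]:
    """Smooth pixel coordinates and convert them to 0-127 controller values.

    alpha = 1.0 disables smoothing; smaller values jitter less but react more slowly.
    Only values that differ from the previous output are emitted.
    """
    out: List[int] = []
    smoothed: Optional[float] = None
    last: Optional[int] = None
    for p in positions:
        smoothed = p if smoothed is None else alpha * p + (1 - alpha) * smoothed
        value = round(smoothed / (size - 1) * 127)
        value = max(0, min(127, value))  # clamp in case tracking strays off-image
        if value != last:
            out.append(value)
            last = value
    return out


# Usage: a hand jumps across a 640-pixel-wide image, then holds still.
print(positions_to_cc([0, 0, 639, 639], size=640, alpha=1.0))  # → [0, 127]
```

With smoothing enabled (say `alpha=0.5`), the same jump would instead arrive as a short ramp of intermediate values, which often sounds more natural on a filter or scrub parameter.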

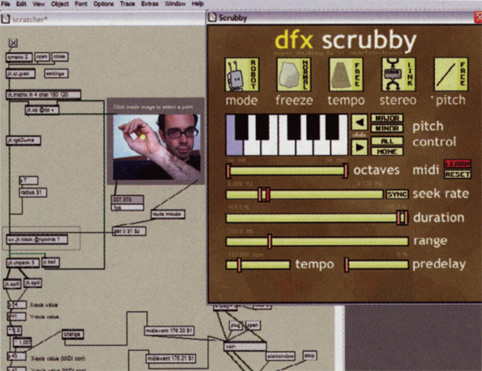

A custom Cycling ’74 Max/MSP and Jitter patch does the work, with the help of the free cv.jit add-on. That yellow dot on top of my hand is tracking movement, which can be routed to an instrument or effect in the form of MIDI data. Here, I’m using the free Windows/Mac VST effect plug-in DFX Scrubby to mangle a looped groove.

You may still want to build your own tool with one of the options above, but trying out this tool with your favorite instruments and effects will give you a sense of what does and doesn’t work. Quick fixes for tracking problems include tracking a brightly colored object or shining a small flashlight at the camera (perfect for darkened onstage performances).
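Tracking a brightly colored object or a flashlight can be as simple as picking out pixels close to a target color. The Python sketch below is an illustration under assumptions: frames are 2D lists of (R, G, B) tuples, a pixel matches if its Euclidean distance from the target color is within a hypothetical `tolerance`, and the tracked point is the centroid of the matching pixels. For a flashlight, the target is simply white.

```python
from typing import List, Optional, Tuple

RGB = Tuple[int, int, int]
Frame = List[List[RGB]]  # pixels indexed as frame[y][x]


def color_centroid(frame: Frame, target: RGB,
                   tolerance: float = 60.0) -> Optional[Tuple[float, float]]:
    """Return the (x, y) centroid of pixels within tolerance of target, or None."""
    tol_sq = tolerance * tolerance
    sum_x = sum_y = count = 0
    for y, row in enumerate(frame):
        for x, (r, g, b) in enumerate(row):
            dr, dg, db = r - target[0], g - target[1], b - target[2]
            if dr * dr + dg * dg + db * db <= tol_sq:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


# Usage: a red marker at (2, 0) and a flashlight at (0, 2) on a gray background.
gray = (100, 100, 100)
frame = [[gray] * 3 for _ in range(3)]
frame[0][2] = (250, 40, 30)    # brightly colored object
frame[2][0] = (255, 255, 255)  # flashlight
print(color_centroid(frame, (255, 0, 0)))      # → (2.0, 0.0)
print(color_centroid(frame, (255, 255, 255)))  # → (0.0, 2.0)
```

Unlike the motion-based approaches, this finds the object even when it holds still, which is why a distinctive color or a light source makes tracking so much more reliable.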

You can build your own audio functionality with these applications’ modular capabilities, or you can route MIDI data to your audio software of choice. Mac users can easily route MIDI between applications using the Inter-Application Communication (IAC) driver: select IAC (or another virtual in/out) in both the sending and the receiving application, and you’re done. Windows users need to install the MIDI-routing tool MIDI Yoke (http://midiox.com/).
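Whichever routing you choose, what travels over the virtual port is ordinary MIDI. As a sketch of the bytes involved (this only builds messages; actually sending them through IAC or MIDI Yoke would need a MIDI library, which is not shown), a Control Change message is the status byte `0xB0` combined with the channel, followed by a controller number and a value, each 0–127; a Note On uses status `0x90`. Channel numbers here are zero-based, so the General MIDI drum channel 10 is `9`. Controller 1 (the modulation wheel) in the example is an arbitrary choice.

```python
def _check_7bit(name: str, value: int) -> None:
    """MIDI data bytes must fit in 7 bits."""
    if not 0 <= value <= 127:
        raise ValueError(f"{name} must be 0-127, got {value}")


def _check_channel(channel: int) -> None:
    if not 0 <= channel <= 15:
        raise ValueError(f"channel must be 0-15, got {channel}")


def control_change(channel: int, controller: int, value: int) -> bytes:
    """Build a 3-byte MIDI Control Change message (channel is 0-15)."""
    _check_channel(channel)
    _check_7bit("controller", controller)
    _check_7bit("value", value)
    return bytes([0xB0 | channel, controller, value])


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Build a 3-byte MIDI Note On message (channel is 0-15)."""
    _check_channel(channel)
    _check_7bit("note", note)
    _check_7bit("velocity", velocity)
    return bytes([0x90 | channel, note, velocity])


# Usage: a tracked position mapped to 64 on controller 1, and a snare hit on channel 10.
print(control_change(0, 1, 64).hex())  # → b00140
print(note_on(9, 38, 100).hex())       # → 992664
```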

Lastly, you need something to control. I wanted « virtual scratching » ability, so I could wave my hands to shuffle and distort beats. The free audio instrument Musolomo (Mac) fit the bill perfectly. Destroy FX’s donationware Scrubby and Buffer Override (Windows/Mac) also work well, though for these you’ll need a host that supports sending MIDI to effects, like the free modular host Buzz (Windows).

Put it all together, and you’ve got video control of audio. You’ll need to experiment with lighting, positioning, and your technique, but as with the original theremin, the effort is part of the fun. Especially when the reward is magically pulling music from the ether.

Peter Kirn is a composer/media artist and editor of createdigitalmusic.com

Author page on makezine: http://makezine.com/pub/au/Peter_Kirn

See also: http://www.instructables.com/
